Get Tech the Hell Away From the Trolley Problem

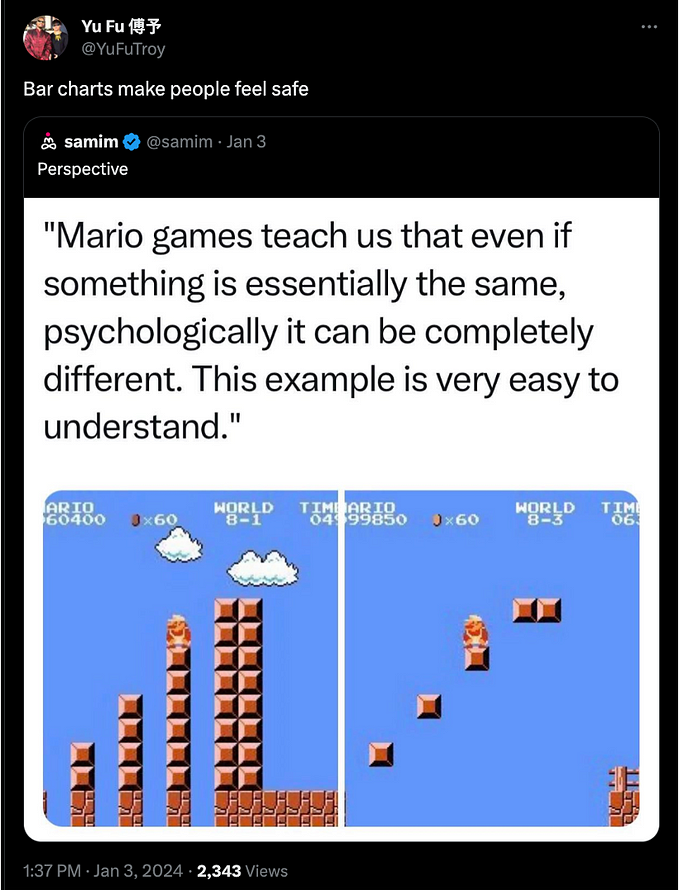

This post will largely be about the MIT Media Lab’s “Moral Machine” work, after a brief detour. If you don’t recall, the Moral Machine was a series of crowdsourced experiments where people were presented with a dilemma in which there is an autonomous car with, say, malfunctioning brakes, careening down a street, and we are given the choice to either let the car continue (striking and killing the pedestrian(s) crossing the street in front of us) or swerve out of the way (striking and killing pedestrian(s) on the side of the road), in essence a version of the famous Trolley Problem. The fact that it’s a “smart car” rather than a switchman pulling a lever is taken to be a Big Deal, somehow. The experimenters present various social or demographic features to the people crossing or on the roadside (pregnant, criminal, Caucasian, drug user, etc. etc.). This work was covered in the technology press with headlines like “Should a self-driving car kill the grandma or a baby? Depends on where you’re from” and “A Study on Driverless-Car Ethics Offers a Troubling Look Into Our Values.”

I really, really dislike this work (yes, even the follow-on sort of apologia work that still manages to double down on a lot of the things I dislike), and the ways I dislike it cover a lot of bases for what I think is wrong in how “tech ethics” is positioned within tech and undertaken by STEM scholars and engineers. I’m far from the first person to voice objections about this project. Russell Brandom thinks that “the test is predicated on an indifference to death.” Brendan Dixon believes that it “exposes the shallow thinking behind the many promises made for artificial intelligence” and “presents caricatures of real moral problems.” Abby Everett Jacques calls the Moral Machine “a monster” that misleadingly elides responsibility for making policy decisions (that are intended to be social and universal) by using the language of individual choices.

The issues I will be raising in this post take a different form, but do require as a basis that you think that the Moral Machine project is, as executed, Not Great. In particular, I am sort of going to take it as read that you agree with at least some form of the following premises, and then move on to other matters:

- The Trolley Problem is not close enough, analogically, to the sorts of moral decisions that we would have to decide upon (implicitly or explicitly) when designing, engineering, or legislating aspects of autonomous vehicles. It leaves large parts of the ethical space totally unexplored. Among other questions, what do we do about the fact that our computer vision algorithms are notoriously inaccurate and biased on racial lines (including, err, a bias that means that autonomous vehicles might totally fail to see the pedestrians upon which the Trolley Problem depends)? How do we manage the social and ecological footprint of all the labor and materials that go into an AI system? What mechanisms of reporting, oversight, and redress should we create when an algorithmic system results in harm? The Trolley Problem doesn’t give us those answers.

- Even if the Trolley Problem were a good match, “asking a bunch of people what their intuitive, off-the-cuff judgments are about this particular thought experiment” is neither necessary nor sufficient to design an “ethical” machine, in the same way that asking a bunch of crowd workers with no medical training to design a cure for cancer is unlikely to be successful. We assume that thinking about ethical systems in the abstract requires expertise and systemization (or at least a sort of acquired phronesis). We look to, e.g., activists and philosophers and religious figures for moral guidance, not to a spreadsheet of crowdsourced responses. That a group holds a particular unreflective moral intuition is not sufficient evidence for the justness of a particular decision, nor is it sufficient justification to reify those intuitions into policy.

We can quibble about how strong or weak I could make those premises before you start objecting, but at the very least I hope some reflection is sufficient to get you to agree “crowdsourcing answers to the Trolley Problem is not the best instrument for building some sort of moral computing architecture.” But I would like to go a step further and suggest that the fact that the Trolley Problem was used for this project at all should fill you with dread for how ethics are conceived in tech, both in academia and industry. If the Moral Machine is the horizon of how mainstream computer scientists and software companies think of ethical design, we’re probably doomed.

Some people have blamed the Trolley Problem itself, calling it “silly” and “neoliberal.” But I really do think that the problem itself is fine, it is just the context to which it has been applied that is setting off my alarm bells. Building up the right sense of dread here will require me going into the history of the Trolley Problem and why it was so emphatically the wrong thing to employ in this instance. To tell that story, I’m first going to tell you a story about the arguments around abortion (trust me, please). It’ll be messing around with the specifics and the timeline a bit for the sake of narrative license, so it’s not a strictly true story, but bear with me. We will get back to computers and capitalism in the end, I swear.

In the era of Roe v. Wade there was a lot of public discussion about the ethics of abortion, but from the philosophical side it can be a bit of a non-starter, really. It’s all too easy to reduce the problem to one about personhood and heap paradox-style argumentation about when personhood “kicks in,” which doesn’t really seem decidable at a societal policy level (we’d expect people to differ on their personal decision boundaries here) and also elides the many other values at play in the abortion debate, like bodily autonomy and consent.

Judith Jarvis Thomson’s “A Defense of Abortion” attempts to cut through this particular Gordian knot through a series of famous thought experiments, most of which are pretty generous in their definition of personhood. I was always partial to the “people seeds” thought experiment (if floating “people seeds” waft around outside and occasionally take root and grow if they get inside my house, am I consenting to raising a child if I occasionally like fresh air but know that my window screens are sometimes defective?). But the thought experiment that arguably made the biggest splash was the “violinist” scenario. Suppose you wake up having been kidnapped and attached via machine to a famous and talented violin player who was experiencing kidney failure. You were abducted because of your uniquely compatible kidneys. As such, if you don’t lie in bed, hooked up to the violinist, they will die. While almost everyone would agree it would be nice (or a supererogatory kindness) to remain hooked up (for, say, nine months, or a year, or the rest of your life) to the violinist, it’s hard to say that you have a moral obligation to do so. You didn’t consent to supporting the violinist, and it would be hard to blame you for unplugging yourself and moving on with your life, even though nobody at all is confused about whether this violinist “counts” as a person.

People have been arguing about “A Defense of Abortion” ever since, but one of the clear points of contention is the degree to which the violinist thought experiment is analogous to abortion. In particular, there is the distinction between killing and letting die. If you ask me “would you like to be hooked up to this violinist?” and I say “no, thank you,” then that seems like a different sort of action than actively unplugging myself from the violinist once I have been attached (even though it was without my consent). The first is letting die, and our intuitions about moral duties in that case seem to be more relaxed than in the latter case, where it seems like we are “actively” killing.

Killing and letting die are connected to what is called the “doctrine of double effect,” which holds that it might be permissible to do a bad thing if it is a side effect of an intended good action, where it would be impermissible to do the same thing as the intended action. Under this doctrine, if I defend myself from somebody attacking me (with the intent only to escape or subdue my attacker) and they accidentally die as a result of my self-defense, that is a dramatically different action than if I set out to murder an enemy, even if the result (one dead enemy) is the same. This sounds reasonable enough, but it creates some rather counter-intuitive conclusions in maximal-personhood violinist-style arguments. For instance, if a pregnancy is non-viable but is introducing complications that will also kill the person who is pregnant, then under this doctrine it would be impermissible to terminate the pregnancy, as it would be setting out with the intent to kill the (non-viable) fetus, but it would be permissible to let both the fetus and the pregnant person die by doing nothing, since you have no intention to kill in that case.

In “The Problem of Abortion and the Doctrine of Double Effect,” Philippa Foot proposes the Trolley Problem in its current form to specifically address this doctrine. In particular, she uses the thought experiment (and many others, such as whether we are allowed to blow up, with dynamite, a stuck person blocking a cave exit in order to save ourselves from rising water, or if a judge can frame an innocent man to spare a town from mob violence) to drive at a concept of positive versus negative duties that does not involve all of this fuzzy “intentions” and “side effects” business. Per Foot, we have certain positive duties to actively do things (for instance, we might have a duty to “take care of our parents”), and certain negative duties to refrain from doing things (for instance, “don’t murder people”). In the positive/negative sense, while it’s nice if I do so, I don’t have a positive duty to save someone. For instance, I’m not put in jail for murder for not giving enough in charity to prevent starvation in some other country. The Trolley Problem is just a case of a conflict of negative duties (“don’t let one person die if you can save them,” “don’t let five people die if you can save them”), who to rescue in a process I didn’t start: in that case pulling the switch is permissible, since once I’ve decided to rescue people, I should probably make sure I rescue the most people. Terminating a pregnancy to save the life of the pregnant person is taken to be a similar example of a conflict in negative duties: you didn’t put anyone in mortal danger, but, now that they are, shouldn’t you try to save as many people as possible?

So that’s the context of the Trolley Problem. It’s an (intentionally, as per the author) glib and somewhat fantastical thought experiment designed to get you to rethink a very particular point about moral duties, under a very specific moral framework, for the goal of speaking to a specific moral question (“when is abortion permissible?”) of real, non-hypothetical and non-fantastical, importance to society.

Lessons from this story:

- The Trolley Problem is just one of many thought experiments in moral philosophy. We don’t have eth-o-scopes or virtue p-values or anything like that, so the process of establishing the soundness of statements about ethics often involves thought experiments that are meant to highlight where particular doctrines or arguments fall short (in this case, the doctrine of double effect). It is in conversation with an ongoing area of ethical inquiry with a centuries-long pedigree. For instance, see Thomson’s rebuttal, which introduces a whole new set of thought experiments involving magic stones and nuclear weapons and mischievous schoolboys.

- The Trolley Problem is meant to interrogate very specific intuitions about killing versus letting die, as part of a wider effort towards determining our moral duties in those cases. You’re not supposed to build out your entire moral philosophy (or even your full opinions about abortion) from your answer to the Trolley Problem. In fact your “answer” isn’t really the point (Foot does not leave it up to the reader to choose their own adventure: she specifically states the permissibility of pulling the lever under different moral frames).

- The distinction between killing and letting die, and generally the Trolley Problem as a whole, only matters if you’ve signed on to one of a set of particular moral frameworks. If you’re a strict utilitarian, then you couldn’t care less about conflicts of duties, let alone levers and tracks: you just add up the utility of saving one group or the other and save whichever is greater. Really all of the language about duties and obligations points to this as specifically a dilemma meant for virtue ethics (or, I guess, certain flavors of deontologists or rule-utilitarians). And even if you are a virtue ethicist, you can still object to the use of the Trolley Problem as “proof” of a particular claim about negative duties (as Thomson did in later work).

- Everyone involved with the Trolley Problem would think you were silly for using it as a practical foundation for a holistic moral theory. Physics students aren’t sent on excursions to kill cats when they learn about Schrödinger and quantum physics, or asked to exile their twins to space when they learn about Einstein and relativity. Seminarians aren’t asked to go outside and find the heaviest rocks they can find to see if God can lift them. It’s just an example of a famous thought experiment; it is neither a replacement for an education in ethics nor sufficiently axiomatic to allow you to re-derive the rest of the field ex nihilo.

Given these lessons, you can perhaps see why I am concerned about the fact that The Moral Machine relies so heavily on the Trolley Problem. To me it seems part of a particular flavor of disinterest, reductionism, or occasionally outright contempt for non-technical expertise that is common in tech. This is partially related to Morozov’s idea of “solutionism”: “recasting all complex social situations either as neat problems with definite, computable solutions or as transparent and self-evident processes that can be easily optimized,” but also tech “saviorism,” where tech assumes that it can jump in and fix things through computational or mathematical methods without taking the time to familiarize itself with the existing research. A recent example of this is when two physicists found epidemiology lacked “intellectual thrill” but nonetheless made a COVID-19 model that guided the University of Illinois’ pandemic plans (and appears to be failing to predict that, e.g., students might live or party together). But one does not have to look far to find examples of tech folks dramatically underestimating the complexity of the problems they are working with, and overestimating the utility of their standard methods for tackling them.

Under an admittedly uncharitable reading, The Moral Machine has this flavor of arrogance and dismissal. The Trolley Problem is perhaps one of the most famous “moral dilemmas” that somebody might have heard of and, well, it’s possible to get through a graduate program in computer science without knowing much else about the field of ethics. So it’s perhaps not such a large leap to assume that the Trolley Problem is at the core of ethical thought, and one that ethicists were probably just waiting on more data to finally “solve.” Or that it would be a useful and important “test case” to solve with crowd-working methods, with all of the other ethical dilemmas as follow-on work of incremental importance. There’s any number of glib analogies here, but the reciprocal scenario would perhaps be like a philosopher bursting into a computer science conference and insisting that they had solved the halting problem by claiming that Zeno’s paradoxes show that all movement is illusory, and so no program can ever be considered to “halt” or “run,” and thus all of these so-called “programmers” are just wasting their time and should be engaged in more productive matters, like contemplating the unitary Godhead.

Sadly, hypothetical Gnostic TED-talks aside, I have other concerns about the use of the Trolley Problem besides just providing yet another example of tech underestimating problems outside of its wheelhouse. As I mentioned, the Trolley Problem is a rather specific, purpose-built thing. A rhetorical guided missile, if you will. It is not (nor is it intended to be) an everyday moral decision that people have to make. So using something so extreme and narrow as the foundation of what you think a “moral machine” should look like is going to lead to bad outcomes. It reminds me a lot of when, in the process of debating the legality of torture in the horrors of the War on Terror, proponents (not just around water coolers but on the floors of Congress and in Presidential debates) would bring up scenarios out of 24 or other jingoist mass culture, with fictitious bombs whose defusal required the immediate torture of equally fictitious unambiguous enemies of the State. It’s not how we should be making policy, from either the perspective of justice or even simple pragmatism. We absolutely should be reasoning about the ethical implications of the things we build, but I think these assessments should be grounded in perhaps just a touch more empiricism than the Trolley Problem presents. E.g., what sort of autonomous vehicle would have sensors that would consult a perfectly calibrated system for assessing the moral worth of everyone around it before changing directions or speed? Who would buy a car knowing that it might decide, lemming-like, to plunge itself off the road as part of a moral calculus you had no access to? If you did buy one, what company would rationally insure you or your vehicle? Hell, the mere structure of the Moral Machine presupposes that we are going to be building privately owned autonomous cars, and the only ethical concern is the best way to design them: would it ever arrive at the conclusion that building the tech at all might be an ethical non-starter, rather than looking for ways to make a bad system “fair”?

But pragmatism aside, as I mentioned way up in the first paragraph, there’s nothing particularly technical about the Moral Machine work. You could have conducted similar research via telegram or Pony Express and written about how we ought to “morally” design steam locomotives or what have you a century or two ago, with the only caveat being you might have to do all of that cumbersome statistical analysis by hand, and your policy suggestions might be instantiated in a gearbox or a rail signal design rather than in code. But by framing this work as being specifically how we design algorithms or artificial intelligences or what have you, I am afraid that it is part of an ongoing process to elide moral responsibility for what an algorithm might do in your name. There is already ongoing policy work to shield organizations from the prejudicial actions of the algorithms that control decision-making; this work feels like another potential step in that direction. You can see it even in the press coverage: the takeaways are about how biased or racist or sexist or ableist people’s judgments were for this task. Rather than try to design a just or equitable autonomous vehicle, a designer might conclude that their job is to better reflect the specific prejudicial priorities of the culture that the car will be deployed to, like the Oscar Mayer factory subtly adjusting the flavorings for east coast versus midwest hotdogs, except here it’s how often your car will hit an elderly person.

A natural response to my concerns is that the Moral Machine work is just a first step in a larger project of determining how to build an ethical autonomous system, a proof of concept that it is possible and practical to solicit ethical “user requirements” that we can then integrate or ignore in later, more ethically complex system building. Hopefully my history lesson shows why the Trolley Problem is not the right choice for those kinds of “first steps” (even assuming, for the moment, that the question of “how do we ethically build algorithmic systems?” hadn’t already been tackled for decades by full-fledged fields like Science and Technology Studies and Human-Computer Interaction). For one, it’s not an “atomic” dilemma that can be used to unlock a host of other ethical projects, but a dilemma that has required you to sign up for a whole host of ethical and epistemic commitments already. So jumping in midway without context is not likely to be helpful. Secondly, collecting empirical data on the Trolley Problem is a category error (even worse than the old canard that “writing about music is like dancing about architecture”): the Trolley Problem is not an open empirical question for which ethicists were waiting on more data, and it’s a methodological mismatch to assume otherwise.

As a reward for putting up with my complaining, I will leave you here with a caveat: I do not intend this piece to serve as a marker of a turf war, telling computer scientists to stay out of philosophy’s territory if they don’t want any trouble (as a computer scientist myself, this would be a bit self-defeating). For one, moral philosophy has its own troubles in this area: it’s an open question if “ethics” is even the right framework for addressing many of these tough tech+justice problems: the focus on individual actions versus social policy, ideal conditions versus lived and situated experience, on finding what the “right” action is versus pragmatically looking at how to achieve a just outcome in an unjust society: all of these are tough problems that ethics as it is traditionally conceived of or taught can struggle with. The solution for the alleged superficiality of ethical reflection in tech is not going to be to force people to read a few old papers and call it a day, but long and equitable collaborations (between disciplines but also between populations).

Secondly, I want people in tech to be doing more reflection, not less. But I want people to know that this is a hard problem. It requires research and reflection. It’s not something you can knock out with a module or two at the end of an introductory computer science course, or with a single study or paper. I often feel like in tech ethics there’s a sense of sympathetic magic at play, that we feel we are absolved of our obligations so long as we know that there are one or two people working away at “the problem” somewhere else. We’re all of us making systems that impact people’s lives now, not only in some speculative general AI future. It’s time we act like it. That means putting away toys like the Trolley Problem and doing real work with (rather than merely about) the populations we expect will be impacted by our tools, and working on righting ongoing wrongs rather than working on our mea culpas to be given after our negative consequences catch up with us.